Maaz Anjum's Blog

A life yet to be lived!

Wednesday, April 9, 2014

VirtualBox, NAT, and Port Forwarding

At Bobby’s (@dbasolved) insistence, I decided to write this post because it might prove useful for some folks. He mentioned following a note by Jeff Smith (@thatjeffsmith) that he is unable to locate at this time. If you are a VirtualBox user, you obviously have a need to maintain multiple Virtual Machines. You would also need to setup a type for one (or more) of its network adaptors. Let’s keep going here, I promise there’s a point to this :) Below is a list of your options:

By default, the NAT option for the first adaptor is selected. NAT = Network Address Translation and will basically let the Guest VM share the same network as your Host (laptop/desktop_ where you can check emails or browse the internet. Chapter 6 of the VBox Documentation here will explain the other options. Without going into too many details about the other options, if you really knew what you were doing and how you’d like your network to be configured then most likely NAT will not be your choice. Myself, I prefer to use Bridged Adaptor which effectively assigned an IP address within the VBox network and I can easily SSH or SQLPlus onto the VM.

This post is aimed to discuss network connectivity to a Guest VM while using NAT using Port Forwarding. I use OSX so my screenshots and menu options might be in a slightly different location than on Windows, however the steps are pretty much the same. Also note that the VM can remain powered on during the setup steps below.

Si Comencia!

1. Navigate to the Settings menu for the VM in question.

2. Click on the “Network Tab”.

3. Assuming your adaptor is enable and configured for NAT, click on the “Advanced” section.

4. Now, the key here is to setup Port Forwarding.

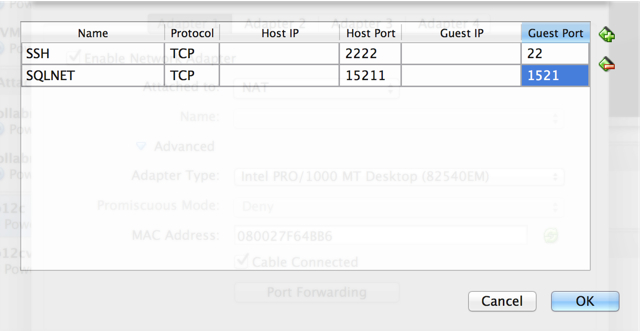

5. Port Forwarding is controlled via “Rules”. These can be configured for TCP and/or UDP protocols. In this example, I want to setup SSH and SQLNET rules. Enter a Rule Name, Select the TCP Protocol, Host Port, and Guest Port. You’ll notice that I intentionally left the IP address sections blank, that is because the rule is generic enough that it will apply to any IP address on the adaptor. Click OK when done.

6. Similarly add a SQLNET port as well and click OK twice.

6. At this point, we are ready to test connectivity. I used the “Terminal” app on OSX, but the same can done from putty. Why am I connecting to my localhost? Well, the nature of NAT is a shared network so when a connection attempt to the port 2222 is made, it is automatically forwarded (via the VBox VM Rule) to the correct Guest VM.

theStone:~ maazanjum$ ssh -p 2222 root@localhost The authenticity of host '[localhost]:2222 ([127.0.0.1]:2222)' can't be established. RSA key fingerprint is ad:ea:6e:84:d8:2d:1e:ff:bc:69:fc:14:47:2e:67:b5. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added '[localhost]:2222' (RSA) to the list of known hosts. root@localhost's password: Last login: Wed Mar 5 16:04:40 2014 from 10.0.2.2 [root@db12c ~]#

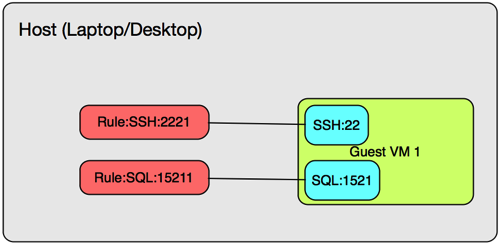

To pictorially review, we basically enabled Port Forwarding for SSH and SQL as illustrated below.

The only catch is that your rules across all Guest VM’s need to be unique. For example, if Rule:SSH:2222 points to Guest VM 1’s Port SSH:22, then for a Guest VM 2 you would likely need a Rule:SSH:2223. I know, it can get a bit confusing but come up with a port numbering scheme. I imagine that you wouldn’t set up Port Forwarding for all of your Guest VMs!

Hope this was helpful!

Cheers.

Metric Extensions in EM12c #1: Monitor a Useful Target such as a GoldenGate Instance

Back in August of 2013, I wrote a post on “Alternative Method to monitor GoldenGate from EM12c outside the GoldenGate 12.1.0.1.0 Plugin” and then back in December of 2013 I wrote another one a Metric Extension to Monitor Unsupported Database Versions. As it turns out, the first post has been quite useful in many customer sites but what it lacks is the process to actually build the Metric Extension (ME).

Note: If you are interested in more ways to monitor GoldenGate, be sure to check out my older posts, Bobby Curtis’ posts (1 & 2), and his upcoming presentation at Collaborate 14. Coincidentally, he is sitting with me on the plane ride over to #C14LV at the moment :-)

It’s important for me to share my experience and reason for not using the metrics provided with the EM12c GoldenGate plugin; I have found it to be a little inconsistent due to several reasons. Starting from the Berkley DB Datastore corruptions, to JAgent hangs, to inaccurate results on the GoldenGate homepage in EM12c, and lastly I’ve experienced unreliable alerting. The JAgent architecture was inherited from the GoldenGate Monitor days and can be roughly described by the illustration below (if this is inaccurate, I’d be more than happy to adjust the diagram below). The parts in green describe the components involved with collecting the data from the GoldenGate instance, as well as, the EM12c side. The process, at certain times, and on certain platforms (Windows) has broken from my experience and after working with Oracle Support for a while until the fixes were released with subsequent patches (11.2.1.0.X), but I still found the incident management and subsequent notifications to work unreliably.

The data flow, as illustrated below described the JAgent which connects to and stores information from the GG Objects periodically in its Datastore (dibdb directory). When the EMAgent polls for updates via the JMX port, it will do so by checking the datastore. Once the raw metric is collected within the repository, it is the EM12c incident management framework which triggers notifications.

With that being said, I’d like to pick up where I left off way back in August of last year.

I already have the output from the monitor_gg.pl script which I will invoke from my new Metric Extension. Let’s begin with a refresher on the lifecycle of an ME:

This post assumes that:

1. You have already downloaded the monitor_gg.pl script onto your host where Golden Gate instances are running.

2. You have tested the script from command line by invoking it, i.e. $ perl monitor_gg.pl and receive the output mentioned in my previous post.

Steps

1. Make your way to the Metric Extensions home page.

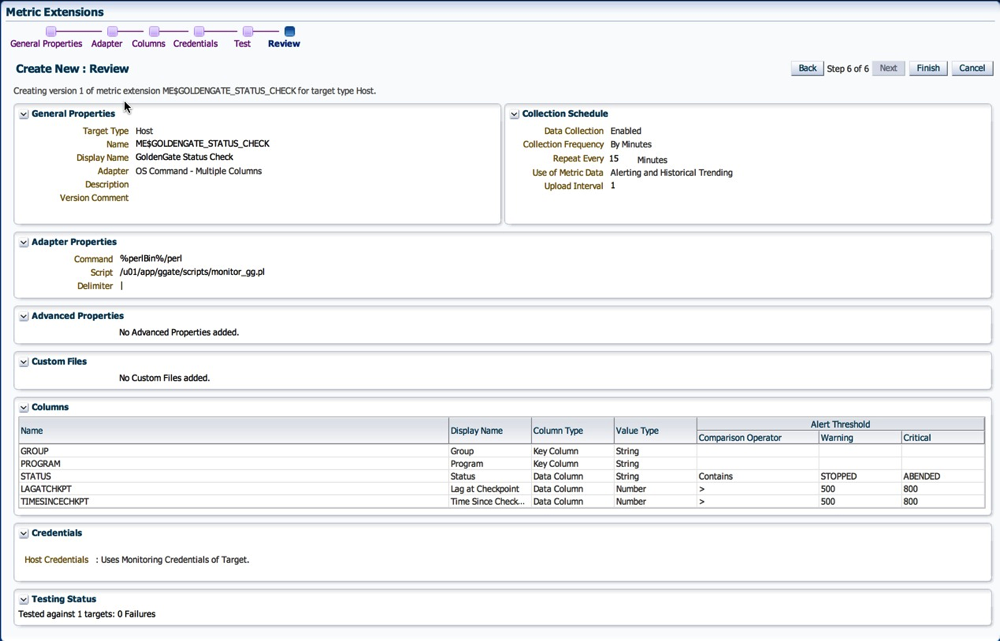

2. Click on “Create”, and enter the relevant details such as “Name”, and “Display Name”. Make sure you select the Adapter as “OS Command - Multiple Columns”. The rest you can leave at default values, or change as per your desired check frequency.

3. On the next page, enter the full path of the script in the “Command” section. Alternatively, you could also leave the “Command” section with the %perlBin%/perl and enter the absolute path of the script in the “Script” section.

4. On the next page, you need to specify the columns returned by the status check. The process is similar to what I mentioned in my previous post Metric Extension to Monitor Unsupported Database Versions, so I will quickly skim through the important bits.

It is important to note that I specified this and the following column as Key Columns. This is because the result set in the ME framework requires unique identifiers.

5. The next column represents the actual program name, i.e. Extract, Replicat, Manager etc.

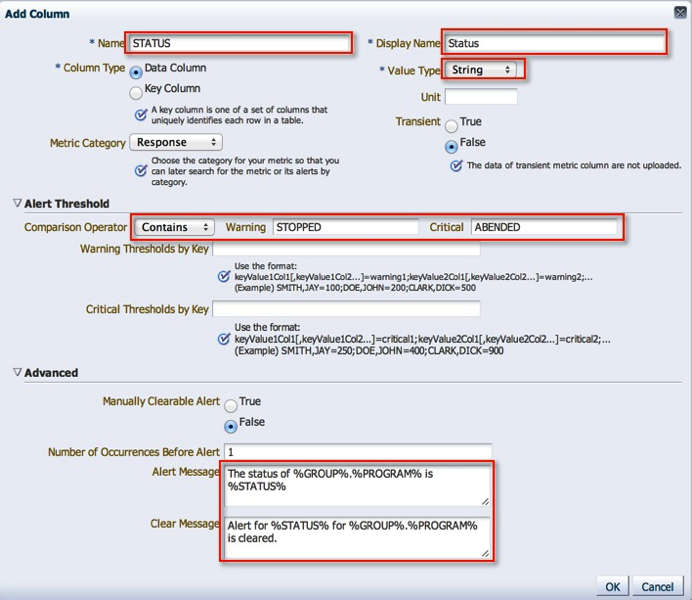

6. Status is an important column because we can use it to trigger state alerts. Note, that I have specified the Warning and Critical thresholds, alert and clear messages. Its quite cool how customizable the framework can be.

7. Next, we have the Lag at Checkpoint, a column which we will use for Alerting. Note, that I have specified the Warning and Critical thresholds, alert and clear messages.

7. Time Since Last Checkpoint is set up in the same manner as the previous column.

8. With that, we are done with the column configuration.

9. I leave the default monitoring credentials in place, however if you are running GoldenGate as user other than the “oracle” user, you will have to either a) create a new monitoring credential set or b) grant the oracle user execute on the monitoring script.

10. We’re coming to the end now. On the next screen, we can actually see this metric in action by running it against a target.

11. Next, we review our settings and save the Metric Extension.

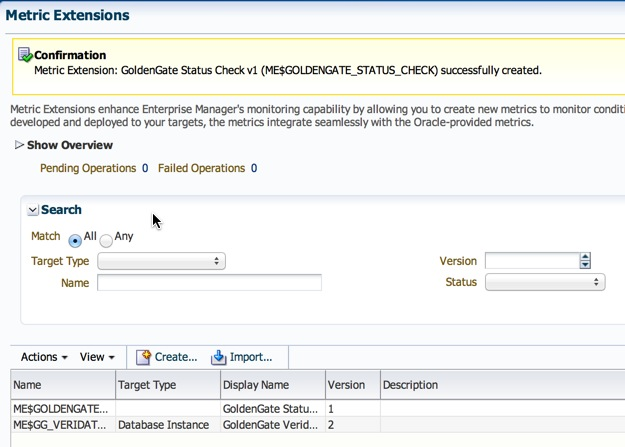

12. Now, back on the ME home page, the metric is in Editable Stage.

13. We simply need to save it as a “Deployable Draft” or a “Published” extension. The former state allows for deployments to individual targets, where as the latter is required for deployments to Monitoring Templates.

14. Follow steps listed under section 10 on my post on creation of metric extensions to deploy the ME.

Once deployed, the metric is collected at the intervals specified in step 2. Depending on how your incident rule sets are configured, you will most likely start receiving alerting once the thresholds we defined above are crossed.

I do have some lessons learned to add to the above posts from an Incident Management perspective, but that will have to be a completely different post :-)

Hope this helps.

Cheers!

Sunday, March 30, 2014

ggsci: error while loading shared libraries: libnnz11.so: wrong ELF class: ELFCLASS32

I know what some of you might be thinking, is this an Elf Class from Word of Warcraft? Not quite. Coincidentally, when you google imagines for “elf world of warcraft”, you get mostly female ones :)

Over the last couple of weeks, I spent a few hours across several sessions explaining and demonstrating a GoldenGate installation and configuration. The team had provided me two Enterprise Linux servers (source and target) where the oracle database software was already installed as well as database instances running.

The environment configuration was:

Source Server: OEL 4.9 32bit

Source Database: Oracle 11.2.0.4 32bit

GoldenGate Binaries: 11.2.1.0.X 32bit

Target: OEL 6.5 64bit

Target Database: Oracle 11.2.0.4 64bit (I assumed)

GoldenGate Binaries: 11.2.1.0.X 64bit

The GoldenGate (11.2.1.0.X) installation on the source was successful, however, on the target side after I unzipped the GoldenGate binaries and executed ggsci I received the nasty error message below:

-bash-4.1$ ./ggsci ./ggsci: error while loading shared libraries: libnnz11.so: wrong ELF class: ELFCLASS32

Interesting. I was used to received the “error while loading shared libraries” which means that 32bit library file location is not in the LD_LIBRARY_PATH. I was still unable to get GGSCI to work. After a lot of toiling around with .bash_profile settings and looking through various MOS notes, I decided to give it a rest until the next day.

Come the next day, it dawned on me that since I did not setup this target environment, is it possible that my assumption about the 64bit Oracle Database binaries was incorrect? The team I was mentoring had told me that this was a sandbox environment, so it was quite likely that someone installed 32bit Oracle Database binaries.

I checked the banner for the “bad” oracle home.

-bash-4.1$ echo $ORACLE_HOME

/u01/11gr2/app/oracle/product/11.2.0/db_1

-bash-4.1$ echo $ORACLE_SID

test1

-bash-4.1$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Wed Mar 26 20:30:10 2014

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL>

Maybe there was something wrong with the installation to begin with, so I Installed a new Oracle 11.2.0.4. Home and checked it’s banner:

-bash-4.1$ echo $ORACLE_HOME

/u01/11gr2/app/oracle/product/11.2.0/db_2

-bash-4.1$ echo $ORACLE_SID

target01

-bash-4.1$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Wed Mar 26 20:30:59 2014

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL>

It wasn’t too surprising that it was exactly the problem. To verify, I started ggsci from the new home and presto it worked!

The point here is, do not take anything for granted. If I had simply gone through my checklist to validate the environment(s), then I could have saved myself a lot of time and effort. And of course, the reason why there were no moss notes directly related to the error message is likely because the support engineers assume that you’ve read the documentation and validated the environment before hand.

Hope this helps.

Cheers.

Tuesday, March 18, 2014

Create a Standby Database using EM12c

I wanted to make this a quick post, because a friend was mentioning a slight problem during a manual set up of Data Guard. I told him you could do it through EM, and then recalled that I had documented it a while back. After the tube light finally went on in my head, it occurred to me that this would a good post.

When leveraging EM to set up, monitor, and manage (Active) Data Guard, Data Guard Broker is configured by default. A quote from Oracle Docs:

"Oracle Data Guard ensures high availability, data protection, and disaster recovery for enterprise data. Data Guard provides a comprehensive set of services that create, maintain, manage, and monitor one or more standby databases to enable production Oracle databases to survive disasters and data corruptions. Data Guard maintains these standby databases as transactionally consistent copies of the primary database. If the primary database becomes unavailable because of a planned or an unplanned outage, Data Guard can switch any standby database to the production role, thus minimizing the downtime associated with the outage. Data Guard can be used with traditional backup, recovery, and cluster techniques, as well as the Flashback Database feature to provide a high level of data protection and data availability.”

Whether or not you chose to set up a different network/listener for the Data Guard traffic is entirely your choice (and a good one in my opinion). However, in this scenario, I simply used the default parameters to illustrate a point.

1. Navigate to the particular database’s home page.

2.On the database home page, click on “Avaliability”->”Add Standby Database”.

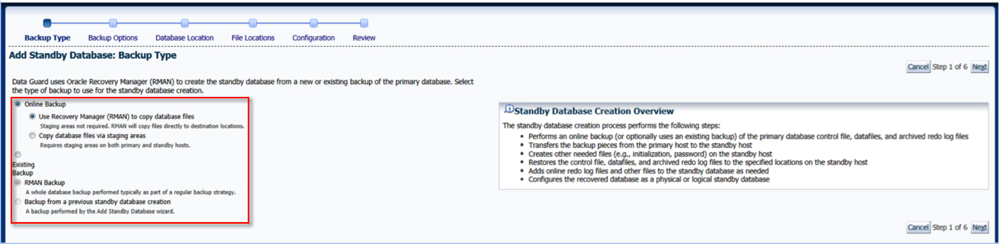

3. On the next screen, click the first radio button and “Continue”. We will create a new “Physical Standby” database.

I will explore this section further after Data Guard is setup.

4. We can leverage the Duplicate from Active Database feature in 11g, therefore, leave default values and click “Continue”.

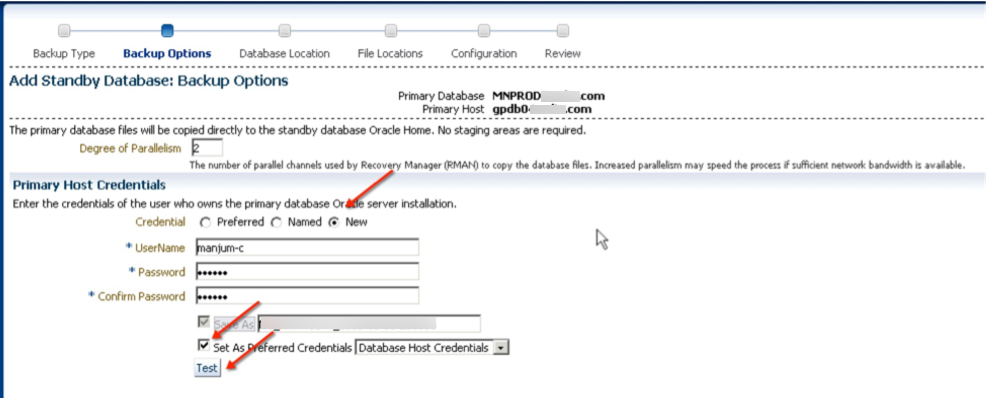

5. Either create a new credential set or use an existing one.

6. Next, we select which host the standby instance will be created on.

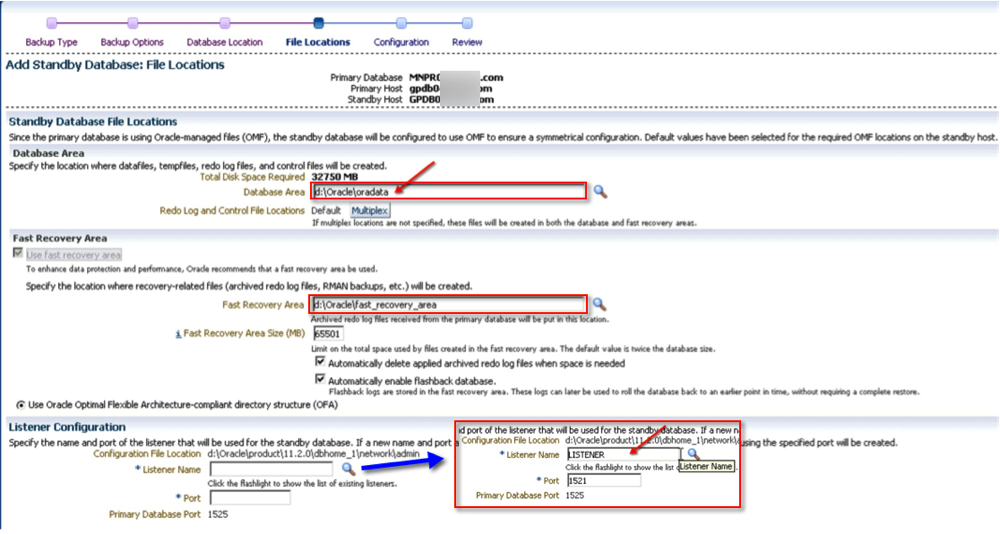

7. At this point, we can chose the relevant file locations. You have the option to change them compared to the primary database if needed.

8. Time to give the new guy a name. You could optionally decide to monitor it as well (a good idea in my opinion). Should you also want to use a different connect net services identifier other than what EM uses, now is a good time to do that.

9. Finally, review your settings and click “Finish”.

10. As with most “tasks” in EM, a job is submitted which can be reviewed.

10.1 Job details.

One complete, the new standby database is configured and ready! In addition to EM, you can also verify the status via Data Guard Brokers interface. By the way, the output below is from a different system.

[oracle@server01 ~]$ dgmgrl /

DGMGRL for Linux: Version 11.2.0.3.0 - 64bit Production

Copyright (c) 2000, 2009, Oracle. All rights reserved.

Welcome to DGMGRL, type "help" for information.

Connected.

DGMGRL> show configuration

Configuration - prim_db.global.name

Protection Mode: MaxPerformance

Databases:

prim_db - Primary database

stand_db - Physical standby database

Fast-Start Failover: DISABLED

Configuration Status:

SUCCESS

DGMGRL>

The funny thing about Data Guard, at least from my experience, is that there’s no two same configurations. For some reason or the other, usually related to network configuration, or one-off requirements which cause the difference. If you follow the steps above, then at the very least you will have a standardized way to deploy standby databases.

Hope this helps!

Cheers.

Monday, March 17, 2014

#em12c Metrics - Part 1: An Introduction

From the middle of 2013, I’d been busy in preparation for one of my presentations for IOUG’s Collaborate 14 Conference in Las Vegas. It is on Capacity Planning Enterprise Manager 12c’s Metrics (available on slideshare), one which I had the honor of presenting earlier at a Georgia Oracle User Group meeting in Atlanta, GA this past week. Metrics in any version of Enterprise Manager are collected (via the Enterprise Manager agents) and stored in its repository database, to be used for rendering historical viewing, but only at each targets respective home page. With EM12c, the list of monitored targets has grown to a staggering amount, especially with the advent of Extensibility Exchange and Metric Extensions (previously known as User Defined Metrics).

From Oracle Databases, multiple Unix Platforms, various types of Middleware products, Oracle VM, The Oracle Cloud, Engineered Systems such as Exadata, Exalogic, and the Big Data Appliance, and many other targets, this tool sure does cover a wide spectrum with an even wider range of metrics (or insights) into each managed target. In addition, using Plug-ins developed either by Oracle or by third party vendors, external hardware/software monitoring is also possible on technology such as VMware, NetApp, Cisco, Brocade, HP Storage, EMC Storage, F5 Load Balancers, and like wise many others. All of this monitored data is indeed stored somewhere, and as I have mentioned earlier, it is simply kept in the Enterprise Managers repository.

Starting with this post, I’d like to begin a series that discusses the various parts and pieces associated with Metrics in Enterprise Manager 12c.

I have already established that data is collected from managed/monitored targets, but have yet to explain the delicate intricacies of that collection. By default, once a target is discovered and promoted in EM12c, the collection of certain metrics that are enabled on a collection schedule. Both of which depend on the target type. As an example, lets take a look at an “Oracle Database”. Each time one is added to the EM inventory, we automatically assume that information regarding its configuration, status, etc will be displayed. That is precisely the kind of “default collection of metrics on a schedule” that I mentioned earlier.

So, how does the data get to the repository? One way to look at it, and some of depictions are straight out of my presentation, is that data from targets is collected by the EM Agents, and pulled into the Management Repository. This is a big shift from the previous releases of Enterprise Manager because they employed the push method (from agents) as opposed to a pull method from the Management Server.

The data lands in the em_metric_value table which contains the “raw” data. A quick look at this table’s structure and data reveals the rawness of the information that is collected.

desc em_metric_values Name Null Type --------------- -------- ----------------------- METRIC_ITEM_ID NOT NULL NUMBER(38) COLLECTION_TIME NOT NULL DATE MET_VALUES NOT NULL EM_METRIC_VALUE_ARRAY()

col metric_item_id format 9999999 heading "Metric Item ID"

col collection_time format a25 heading "Collection Time"

col met_values format a100 heading "Metric Values"

select metric_item_id

,collection_time

,met_values

from em_metric_values

where rownum < 11; -- Only used to restrict the data returned.

Metric Item ID Collection Time Metric Values

-------------- ------------------------- ----------------------------------------------------------------------------------------------------

1561578 13-FEB-14 12.13.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,0.209,null,0,72.899,null,null,16,0,null,0.018)

1561578 13-FEB-14 12.28.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,0.191,null,0,68.343,null,null,15,0,null,0.017)

1561578 13-FEB-14 12.43.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,3.604,null,0,651623.938,null,null,2310,0,null,2.57)

1561578 13-FEB-14 12.58.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,0.187,null,0,68.343,null,null,15,0,null,0.017)

1561578 13-FEB-14 01.13.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,0.206,null,0,68.343,null,null,15,0,null,0.017)

1561578 13-FEB-14 01.28.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,0.184,null,0,68.343,null,null,15,0,null,0.017)

1561578 13-FEB-14 01.43.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,4.53,null,0,958473.112,null,null,3347,0,null,3.723)

1561578 13-FEB-14 01.58.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,0.195,null,0,68.343,null,null,15,0,null,0.017)

1561578 13-FEB-14 02.13.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,0.191,null,0,72.899,null,null,16,0,null,0.018)

1561578 13-FEB-14 02.28.46 AM SYSMAN.EM_METRIC_VALUE_ARRAY(null,null,0,0.19,null,0,63.786,null,null,14,0,null,0.016)

10 rows selected

At regular intervals, this table’s data is aggregated into hourly and daily metric values. The corresponding tables are em_metric_values_hourly and em_metric_values_daily.

To ensure adequate performance, all three tables are partitioned as per the chart below. More information regarding the partitioning strategy can be found in “12c Cloud Control Repository: How to Modify the Default Retention and Purging Policies for Metric Data? (Doc ID 1405036.1)”.

Now, I probably know what you are thinking. If I query the raw data, then what good is it to me in the above format. To understand and view the data coherently, the mgmt$metric_values, mgmt$metric_values_hourtly, mgmt$metric_values_daily OR gc$metric_values, gc$metric_values_hourly, gc$metric_values_daily views which are compliments of the tables mentioned earlier.

You might have seen various queries that use the mgmt$ tables, but from what I seen the gc$ tables are newer versions with slightly different metric column names and labels.

Let’s take a quick look at the gc$metric_values and its contents.

desc gc$metric_values Name Null Type ------------------------- -------- ------------- ENTITY_TYPE NOT NULL VARCHAR2(64) ENTITY_NAME NOT NULL VARCHAR2(256) TYPE_META_VER NOT NULL VARCHAR2(8) METRIC_GROUP_NAME NOT NULL VARCHAR2(64) METRIC_COLUMN_NAME NOT NULL VARCHAR2(64) COLUMN_TYPE NOT NULL NUMBER(1) COLUMN_INDEX NOT NULL NUMBER(3) DATA_COLUMN_TYPE NOT NULL NUMBER(2) METRIC_GROUP_ID NOT NULL NUMBER(38) METRIC_GROUP_LABEL VARCHAR2(64) METRIC_GROUP_LABEL_NLSID VARCHAR2(64) METRIC_COLUMN_ID NOT NULL NUMBER(38) METRIC_COLUMN_LABEL VARCHAR2(64) METRIC_COLUMN_LABEL_NLSID VARCHAR2(64) DESCRIPTION VARCHAR2(128) SHORT_NAME VARCHAR2(40) UNIT VARCHAR2(32) IS_FOR_SUMMARY NUMBER IS_STATEFUL NUMBER NON_THRESHOLDED_ALERTS NUMBER METRIC_KEY_ID NOT NULL NUMBER(38) KEY_PART_1 NOT NULL VARCHAR2(256) KEY_PART_2 NOT NULL VARCHAR2(256) KEY_PART_3 NOT NULL VARCHAR2(256) KEY_PART_4 NOT NULL VARCHAR2(256) KEY_PART_5 NOT NULL VARCHAR2(256) KEY_PART_6 NOT NULL VARCHAR2(256) KEY_PART_7 NOT NULL VARCHAR2(256) COLLECTION_TIME NOT NULL DATE COLLECTION_TIME_UTC DATE VALUE NUMBER

col entity_type format a10 heading "Entity|Type"

col entity_name format a25 heading "Entity|Name"

col metric_group_label format a7 heading "Metric|Group|Label"

col metric_group_name format a14 heading "Metric|Group|Name"

col metric_column_label format a50 heading "Metric|Column|Label"

col metric_column_name format a14 heading "Metric|Column|Name"

col short_name format a15 heading "Short|Name"

col value format 99.99 heading "Value"

select entity_type

,entity_name

,metric_group_name

,metric_column_name

,metric_group_label

,metric_column_label

,short_name

,collection_time

,value

from gc$metric_values

where rownum < 11; -- Only used to restrict rows returned.

Metric Metric Metric Metric

Entity Entity Group Column Group Column Short

Type Name Name Name Label Label Name Collection Time Value

---------- ------------------------- -------------- -------------- ------- -------------------------------------------------- --------------- ------------------------- ------

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.01.56 AM 4.08

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.06.56 AM 4.11

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.11.56 AM 4.03

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.16.56 AM 4.03

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.21.56 AM 4.01

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.26.56 AM 4.00

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.31.56 AM 4.01

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.36.56 AM 4.11

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.41.56 AM 4.01

host server01.planet.net Load cpuLoad_1min Load Run Queue Length (1 minute average,per core) CPU Load (1min) 13-FEB-14 12.46.56 AM 4.00

10 rows selected

I know this blog posts probably lends itself to more questions. What data, other than the one showed above, do we actually have access to in Enterprise Manager? How can we obtain the information and then create reports on resource utilization for trend analysis, and capacity planning? How does Enterprise Manager allow data visualization? Which tools could I use for custom reports? Enterprise Manager does indeed monitor, keep track of, and enables the user to gather a myriad of information from each target.

The data is there.

Stay tuned for future posts which will cover the topics I have touched on in the sections above. If you are headed to Collaborate this year, and are interested in hearing further in-person, my Session # is 102 Capacity Planning: How to Leverage OEM12c for Engineered Systems.

Cheers!

Tuesday, March 11, 2014

opatch apply online on 11.2.0.4

The credit for the patch apply in the post goes to a new friend that wishes to remain anonymous, except that her initials start with KA, and who happens to work at a company in Memphis, TN. Coincidentally, that is where I had been liv. During a recent EM12c project at her company, we were going through the steps to create a 2-node RAC environment. After registering it with Enterprise Manager, and adding the relevant targets (Oracle Homes, Databases, Listeners etc) we tested the connectivity (specifically checking the tablespace usage) to one of the databases and receive an “ORA-01000 maximum open cursors” message.

The open_cursors parameter in the respective database was set to 5000, and there were hardly any connections on it at the time which could explain it. We attempted a local login (from the respective host) as well as a listener based login, both of which were successful. After a quick search on MOS, it turns out there’s a bug which causes queries from Enterprise Manager on 11.2.0.4 Databases: “EM 12c: Querying a List of tablespaces for an 11.2 Oracle Database Results in ORA-1000 Error in Enterprise Manager 12.1.0.3 Cloud Control (Doc ID 1618684.1)”. The solution, according to the MOS note, was to apply Patch 17897511 on the RDBMS home where the 11.2.0.4 instance resides.

Since I’d never tested the online patching process, it seemed like a good time to try it out. I’d recommend reading Jason Arniel’s post here on the subtle “ism’s” of online patching - I found it to be quite useful.

Let’s begin.

d-oratest11-db01.planets.com:/u01/oracle/software/patch/17897511:oratst111> /u01/oracle/product/11.2.0/db_1/OPatch/opatch apply online -connectString oratst111:sys:password:d-oratest11-db01,oratst112:sys:password:d-oratest11-db02

Oracle Interim Patch Installer version 11.2.0.3.4

Copyright (c) 2012, Oracle Corporation. All rights reserved.

Oracle Home : /u01/oracle/product/11.2.0/db_1

Central Inventory : /u01/oracle/oraInventory

from : /u01/oracle/product/11.2.0/db_1/oraInst.loc

OPatch version : 11.2.0.3.4

OUI version : 11.2.0.4.0

Log file location : /u01/oracle/product/11.2.0/db_1/cfgtoollogs/opatch/17897511_Feb_14_2014_12_28_57/apply2014-02-14_12-28-56PM_1.log

The patch should be applied/rolled back in '-all_nodes' mode only.

Converting the RAC mode to '-all_nodes' mode.

Applying interim patch '17897511' to OH '/u01/oracle/product/11.2.0/db_1'

Verifying environment and performing prerequisite checks...

All checks passed.

Backing up files...

Patching component oracle.rdbms, 11.2.0.4.0...

Installing and enabling the online patch 'bug17897511.pch', on database 'oratst111'.

Verifying the update...

Patching in all-node mode.

Updating nodes 'd-oratest11-db02'

Apply-related files are:

FP = "/u01/oracle/product/11.2.0/db_1/.patch_storage/17897511_Jan_28_2014_07_45_26/rac/copy_files.txt"

DP = "/u01/oracle/product/11.2.0/db_1/.patch_storage/17897511_Jan_28_2014_07_45_26/rac/copy_dirs.txt"

MP = "/u01/oracle/product/11.2.0/db_1/.patch_storage/17897511_Jan_28_2014_07_45_26/rac/make_cmds.txt"

RC = "/u01/oracle/product/11.2.0/db_1/.patch_storage/17897511_Jan_28_2014_07_45_26/rac/remote_cmds.txt"

Instantiating the file "/u01/oracle/product/11.2.0/db_1/.patch_storage/17897511_Jan_28_2014_07_45_26/rac/copy_files.txt.instantiated" by replacing $ORACLE_HOME in "/u01/oracle/product/11.2.0/db_1/.patch_storage/17897511_Jan_28_2014_07_45_26/rac/copy_files.txt" with actual path.

Propagating files to remote nodes...

Instantiating the file "/u01/oracle/product/11.2.0/db_1/.patch_storage/17897511_Jan_28_2014_07_45_26/rac/copy_dirs.txt.instantiated" by replacing $ORACLE_HOME in "/u01/oracle/product/11.2.0/db_1/.patch_storage/17897511_Jan_28_2014_07_45_26/rac/copy_dirs.txt" with actual path.

Propagating directories to remote nodes...

Installing and enabling the online patch 'bug17897511.pch', on database 'oratst112' on node 'd-oratest11-db02'.

Patch 17897511 successfully applied

Log file location: /u01/oracle/product/11.2.0/db_1/cfgtoollogs/opatch/17897511_Feb_14_2014_12_28_57/apply2014-02-14_12-28-56PM_1.log

OPatch succeeded.

Great! Let’s just make sure that it worked.

d-oratest11-db01.planets.com:/u01/oracle/software/patch/17897511:oratst111> /u01/oracle/product/11.2.0/db_1/OPatch/opatch lsinventory

Oracle Interim Patch Installer version 11.2.0.3.4

Copyright (c) 2012, Oracle Corporation. All rights reserved.

Oracle Home : /u01/oracle/product/11.2.0/db_1

Central Inventory : /u01/oracle/oraInventory

from : /u01/oracle/product/11.2.0/db_1/oraInst.loc

OPatch version : 11.2.0.3.4

OUI version : 11.2.0.4.0

Log file location : /u01/oracle/product/11.2.0/db_1/cfgtoollogs/opatch/opatch2014-02-14_12-29-37PM_1.log

Lsinventory Output file location : /u01/oracle/product/11.2.0/db_1/cfgtoollogs/opatch/lsinv/lsinventory2014-02-14_12-29-37PM.txt

--------------------------------------------------------------------------------

Installed Top-level Products (1):

Oracle Database 11g 11.2.0.4.0

There are 1 products installed in this Oracle Home.

Interim patches (1) :

Patch (online) 17897511: applied on Fri Feb 14 12:29:01 CST 2014

Unique Patch ID: 17213015

Created on 28 Jan 2014, 07:45:26 hrs PST8PDT

Bugs fixed:

17897511

Rac system comprising of multiple nodes

Local node = d-oratest11-db01

Remote node = d-oratest11-db02

--------------------------------------------------------------------------------

OPatch succeeded.

And, thats it. After the patch application, we were able to query the tablespace information for all databases running from this home successfully via Enterprise Manager.

Cheers!

Wednesday, February 26, 2014

Set oraInventory for EM12c Agents during GUI Installation

So, this is a peculiar problem that I had to dig to find a solution for; each time I deployed an agent to Add Targets to EM12c where no other Oracle Software was located, it would always created the oraInventory located under the $HOME directory. In my case, /home/oracle/oraInventory. This is quite annoying because for subsequent Oracle Software installs, this incorrect location is always chosen. Instead, I want it to go the, lets say /u01/oracle/oraInventory.

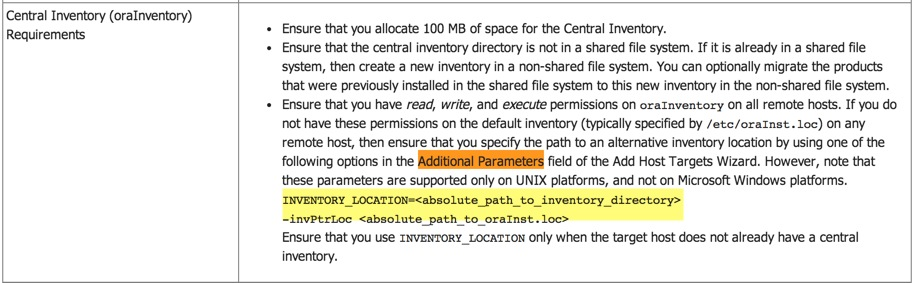

I knew there must be a way to get around this, and after digging in MOS and Oracle Docs, I found the section below. Documentation 9.4.1 section i.

To further expand on the documentation, there’s a couple of sections which describe the usage.

Looking at Table 9-2.

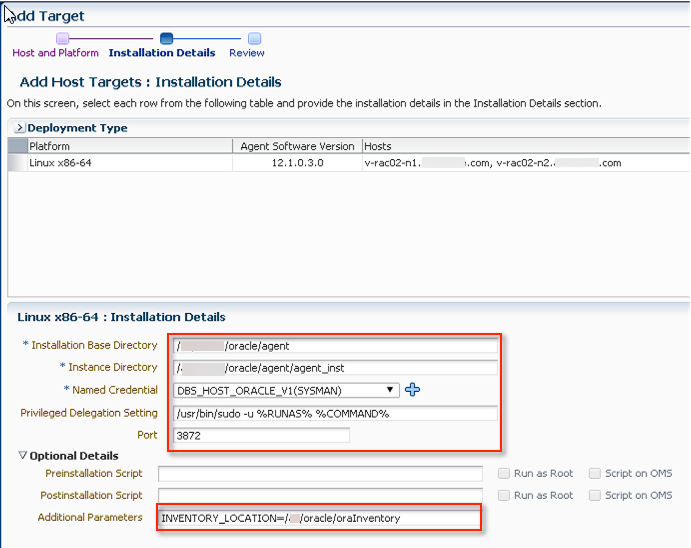

I had to give this one a try! So, after you get to the Add Targets home page, enter the Host Name(s) and find the section at the bottom for “Additional Parameters”. Simply put this section in it INVENTORY_LOCATION=/u01/oracle/oraInventory.

Proceed with the rest of the installation steps, and at its completion check the host(s) folders.

So, at the end of the day I have to give credit to the amazing documentation Oracle has put together for OEM. You just need to know what you want, then ask the right question.

Hope this helps!

Cheers.

Monday, February 17, 2014

Starting NFS quotas: Cannot register service: RPC: Unable to receive; errno = Connection refused

While trying to setup NFS on an OVM 3.2 configuration on OEL 5.9, I followed the documentation to the letter but when time came to start the NFS daemon I kept getting the error above.

[root@ovm /]# service nfs start Starting NFS services: [ OK ] Starting NFS quotas: Cannot register service: RPC: Unable to receive; errno = Connection refused rpc.rquotad: unable to register (RQUOTAPROG, RQUOTAVERS, udp). [FAILED] Starting NFS daemon: [FAILED]

After a little googling, it turns out that RPCBind may not be running.

[root@ovm /]# rpcinfo -p rpcinfo: can't contact portmapper: RPC: Remote system error - Connection refused

Perhaps I needed to update my NFS Utility packages?

[root@ovm /]# yum install nfs-utils Loaded plugins: rhnplugin, security This system is not registered with ULN. You can use up2date --register to register. ULN support will be disabled. Setting up Install Process Resolving Dependencies --> Running transaction check ---> Package nfs-utils.x86_64 1:1.0.9-70.el5 set to be updated --> Processing Dependency: initscripts >= 8.45.43 for package: nfs-utils --> Running transaction check ---> Package initscripts.x86_64 0:8.45.44-3.0.1.el5 set to be updated --> Finished Dependency Resolution Dependencies Resolved ============================================================================================================================================================= Package Arch Version Repository Size ============================================================================================================================================================= Updating: nfs-utils x86_64 1:1.0.9-70.el5 el5_latest 409 k Updating for dependencies: initscripts x86_64 8.45.44-3.0.1.el5 el5_latest 1.6 M Transaction Summary ============================================================================================================================================================= Install 0 Package(s) Upgrade 2 Package(s) Total download size: 2.0 M Is this ok [y/N]: y Downloading Packages: (1/2): nfs-utils-1.0.9-70.el5.x86_64.rpm | 409 kB 00:00 (2/2): initscripts-8.45.44-3.0.1.el5.x86_64.rpm | 1.6 MB 00:01 ------------------------------------------------------------------------------------------------------------------------------------------------------------- Total 598 kB/s | 2.0 MB 00:03 Running rpm_check_debug Running Transaction Test Finished Transaction Test Transaction Test Succeeded Running Transaction Updating : initscripts 1/4 warning: /etc/sysctl.conf created as /etc/sysctl.conf.rpmnew Updating : nfs-utils 2/4 Cleanup : nfs-utils 3/4 Cleanup : initscripts 4/4 Updated: nfs-utils.x86_64 1:1.0.9-70.el5 Dependency Updated: initscripts.x86_64 0:8.45.44-3.0.1.el5 Complete!

Then I tried the RPC info command again, but no luck!

[root@ovm /]# rpcinfo -p rpcinfo: can't contact portmapper: RPC: Remote system error - Connection refused

Portmap? Oh, that would explain a lot since NFS apparently requires port mapper service to run.

[root@ovm /]# chkconfig portmap on [root@ovm yum.repos.d]# service portmap start

Try RPC info again. Aha, that did it!

[root@ovm yum.repos.d]# rpcinfo -p program vers proto port 100000 2 tcp 111 portmapper 100000 2 udp 111 portmapper

Finally, restart the NFS Service and set it to autostart.

[root@ovm /]# service nfs start Starting NFS services: [ OK ] Starting NFS quotas: [ OK ] Starting NFS daemon: [ OK ] Starting NFS mountd: [ OK ] [root@ovm /]# chkconfig nfs on

Hope this was helpful!

Cheers!

Saturday, February 15, 2014

Oracle VM 3.2 - OVMAPI_4004E Server Failed Command: HTTP server returned unexpected status: Unauthorized access attempt

You might receive the error message below while discovering an Oracle Virtual Server:

(02/15/2014 05:57:26:942 PM) OVMAPI_4010E Attempt to send command: get_api_version to server: 192.168.78.157 failed. OVMAPI_4004E Server Failed Command: get_api_version , Status: org.apache.xmlrpc.client.XmlRpcHttpTransportException: HTTP server returned unexpected status: Unauthorized access attempt from ('192.168.78.155', 55150)! [Sat Feb 15 17:57:26 PST 2014] [Sat Feb 15 17:57:26 PST 2014]

This means that most likely your password for the agents user is incorrect. I got around this by resetting it on the Oracle Virtual Server. Thanks to Avi for posting it in the Oracle Forums!

$ ovs-agent-passwd oracle Password: Again:

Attempt to rediscover again, and it should work.

Cheers!

Wednesday, February 12, 2014

GoldenGate 12.1.2.0 MBeansContainerImpl - Runtime exception starting jAgent Server

During the EM 12.1.0.3.0 configuration to monitor GoldenGate, I stepped through the documentation (as I have numerous times in the past) but this time I kept getting the error message in the jagent.log.

2014-02-12 00:58:54 [main] INFO JAgentWSMain - About to call initialize on the WebService 2014-02-12 00:58:54 [main] INFO JAgentWSMain - Loading agent-spring-ws.xml ############### 2014-02-12 00:58:56 [main] INFO AgentInfoImpl - OEM Enabled ###### 2014-02-12 00:58:56 [main] INFO ManagerFacadeImpl - Metadata initialized 2014-02-12 00:58:56 [main] ERROR MBeansContainerImpl - Runtime exception starting jAgent Server. Jagent Host=localhost, Jagent JMX Port=5559, Jagent Config Dir=./cfg, Monitor Host=localhost, Monitor Port=15000 java.lang.NullPointerException

From my previous experience with the JAgent, I’d had to make many quirky changes in the managers parameter file. For example:

- Rename the parameter file from MGR.prm to mgr.prm

- Remove any comments from the managers parameter file that contain the word “port”

- Remove all comments from the parameter file!

None of those tricks worked because I kept getting the same error message. After the correct search on MOS yielded “OGG 12c JAGENT Fails To Initialize MBeansContainerImpl - Runtime Exception Starting JAgent Server (Doc ID 1598597.1)” suggesting that I add the line below in the Config.properties.

jagent.ssl=false

Due to the default behavior change in GoldenGate 12c (which is not stated), it is recommended that we add the line above for EM12c monitor configuration. Sure enough, once it was added the JAgent started successfully.

2014-02-12 01:04:31 [main] INFO JAgentWSMain - About to call initialize on the WebService 2014-02-12 01:04:31 [main] INFO JAgentWSMain - Loading agent-spring-ws.xml ############### 2014-02-12 01:04:33 [main] INFO AgentInfoImpl - OEM Enabled ###### 2014-02-12 01:04:33 [main] INFO ManagerFacadeImpl - Metadata initialized 2014-02-12 01:04:34 [main] INFO JAgentRmiJmxFactory - Starting JMX connector server on port 5559 2014-02-12 01:04:34 [main] INFO JAgentWSMain - JAgent finished initialization. 2014-02-12 01:04:34 [ManagerConnectionKeeper] INFO ManagerWSApi - Created WSAPI 2014-02-12 01:04:34 [ManagerConnectionKeeper] INFO MBeansContainerImpl - Start Message Polling Thread... 2014-02-12 01:04:34 [ManagerConnectionKeeper] INFO MBeansContainerImpl - Start Status Polling Thread... 2014-02-12 01:04:34 [StatusCollector] INFO ManagerWSApi - Object Id: capture:E_SMAN 2014-02-12 01:04:34 [StatusCollector] INFO ManagerWSApi - Object Id: agent:MGR 2014-02-12 01:04:34 [StatusCollector] INFO ManagerWSApi - loadManagerMonitoringPoints Getting Monitoring Points for MGR 2014-02-12 01:04:39 [getInstanceList] INFO ManagerWSApi - Object Id: capture:E_SMAN 2014-02-12 01:04:39 [getInstanceList] INFO ManagerWSApi - Object Id: agent:MGR 2014-02-12 01:04:39 [getInstanceList] INFO ManagerWSApi - loadManagerMonitoringPoints Getting Monitoring Points for MGR 2014-02-12 01:16:51 [MessageCollector] INFO MessageCollector - Processing message for GGSCI Sequence 218 2014-02-12 01:16:51 [MessageCollector] INFO MessageCollector - Processing message for GGSCI Sequence 219 2014-02-12 01:16:51 [MessageCollector] INFO MessageCollector - Flushing messages for MGR

Hope this was helpful.

Cheers!